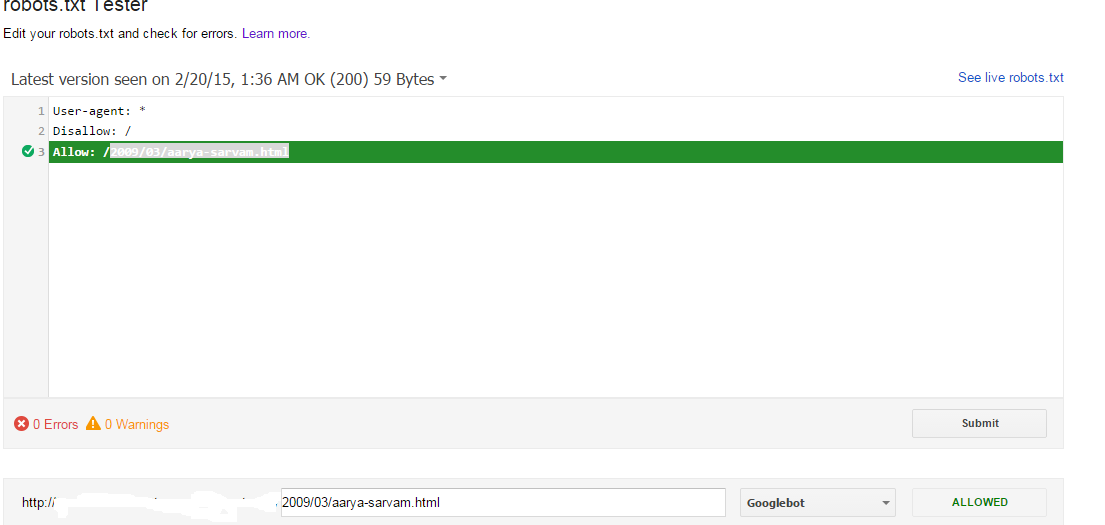

How Google treats the "Allow" field in robots.txt

What is meant by robots.txt: robots.txt is a set of instructions that tells search engine crawlers which pages, folders, images, and other files they should not access. It can also be used to block particular search engines, such as Google, Bing, or Yahoo.

To allow all search engines to access all files:

    User-agent: *
    Disallow:

To block all search engines from accessing any file:

    User-agent: *
    Disallow: /

What can we do if we need to exclude all files except a few? We have to add a Disallow rule for every file that needs to be excluded; otherwise, those files remain accessible to search engines. Here is the advice from robotstxt.org:

To exclude all files except one

This is currently a bit awkward, as there is no "Allow" field. The easy way is to put all files to be disallowed into a separate directory, say "stuff", and leave the one file in the level above this directory:

    User-agent: *
    Disallow: /~joe/stuff/

REF: http://w...
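As a rough way to check how the three rule sets above behave, here is a minimal sketch using Python's standard `urllib.robotparser` module. It assumes Python's parser as a stand-in for a crawler; it is not Google's own parser, and real crawlers may differ in details. The helper `allowed`, the agent name passed to it, and the two file paths are hypothetical and chosen only for illustration.

```python
from urllib.robotparser import RobotFileParser


def allowed(rules: str, path: str, agent: str = "Googlebot") -> bool:
    """Return True if `agent` may fetch `path` under the given robots.txt text.

    Hypothetical helper: it feeds the rules to Python's built-in parser.
    """
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(agent, path)


# The three rule sets quoted in the text.
ALLOW_ALL = "User-agent: *\nDisallow:"
BLOCK_ALL = "User-agent: *\nDisallow: /"
BLOCK_STUFF = "User-agent: *\nDisallow: /~joe/stuff/"

# Hypothetical paths: one file kept above "stuff", one file inside it.
KEPT = "/~joe/index.html"
HIDDEN = "/~joe/stuff/private.html"

for name, rules in [
    ("allow all", ALLOW_ALL),
    ("block all", BLOCK_ALL),
    ("block stuff/", BLOCK_STUFF),
]:
    print(name, "| kept file:", allowed(rules, KEPT),
          "| hidden file:", allowed(rules, HIDDEN))
```

Under these assumptions, only the third rule set treats the two paths differently: the file left above the "stuff" directory stays fetchable while everything inside it is blocked, which is the workaround the robotstxt.org advice describes.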